5 Mistakes You Should Avoid When A/B Testing Your Designs - Six Revisions

Posted: 26 Aug 2013 03:00 AM PDT

A/B testing can help improve your web designs. Even a small tweak, such as changing the location of your call-to-action button, can increase sales by 35.6%. Performing A/B tests lets you verify that your design changes actually lead to better results.

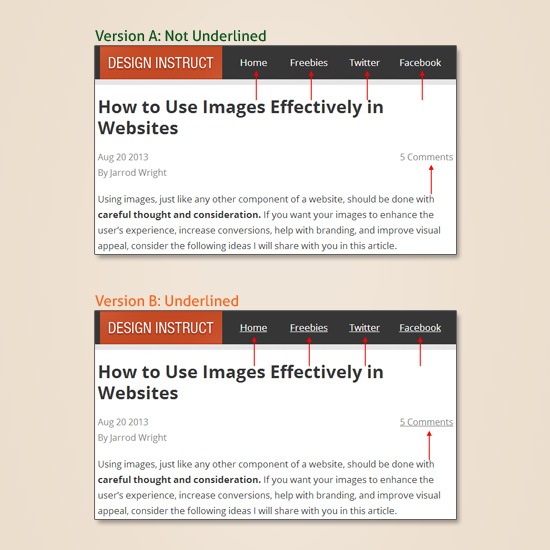

As a web designer who strives to create high-performing websites, you probably already know about A/B testing. But if this is the first time you've heard of A/B testing (sometimes called split testing), read this introductory guide first: An Introduction to Website Split Testing.

Though A/B testing seems like a no-brainer, especially with the huge array of user-friendly tools out there like Optimizely, Google Analytics Content Experiments, and Visual Website Optimizer (the company I work for), there are a few common mistakes that will lead you toward unreliable results. These mistakes could spell disaster for a website because they can lower its conversion rates. At the very least, making the mistakes I'll be talking about below means you won't get the best data for making informed design decisions.

Mistake #1: Using A/B Tests When You Really Shouldn't

A/B testing is best used when you want to test two different versions of one variable. For example, if you want to find out whether your hyperlinks get clicked more when they're underlined than when they aren't, you can create two versions of a web page: one with underlined links (version A) and one without (version B). The variable being tested is the hyperlink's text decoration (underlined vs. not underlined).

In this case, A/B testing is an effective way to see which version performs better. But when you want to test more than one variable, you should use multivariate testing (MVT). Continuing our hyperlink example, you should use multivariate testing if you want to find the best combination of these three variables:

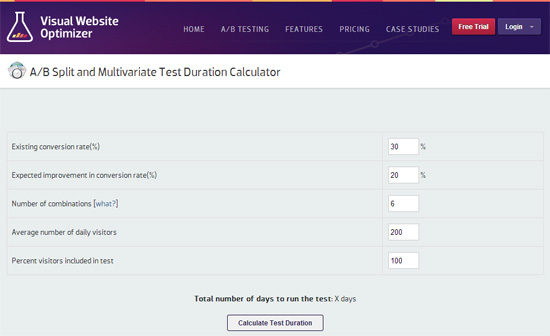
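The exact option values for the three variables are given in the image above, so the values in this sketch are assumptions based on the styles mentioned in this section (underlining, color, bold/italic); the third color, red, is purely hypothetical. The Python snippet uses `itertools.product` to enumerate every combination of one value per variable, which is how the number of versions multiplies in a multivariate test.

```python
from itertools import product

# Hypothetical option values for the three hyperlink variables;
# the real options in the figure may differ.
variables = {
    "text_decoration": ["underline", "none"],
    "color": ["blue", "green", "red"],
    "font_style": ["normal", "bold", "italic"],
}

# Each combination of one value per variable is a separate version to test.
versions = [dict(zip(variables, combo)) for combo in product(*variables.values())]

print(len(versions))  # 18
print(versions[0])    # {'text_decoration': 'underline', 'color': 'blue', 'font_style': 'normal'}

# Traffic is split across every version, so each one gets only a small share.
monthly_visitors = 50_000  # hypothetical monthly traffic
print(monthly_visitors // len(versions))  # 2777
```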

In the case above, there are 18 (2 × 3 × 3) different versions you need to test to get the best results. Why test 18 different versions? Because the three variables could interact in ways that affect conversion performance. For instance, green hyperlinks may outperform blue hyperlinks only when the green links are bold and not underlined, yet perform worse than blue hyperlinks when they're italicized and underlined. However, multivariate testing divides your site traffic among all the versions being tested, so I recommend this method only when the page you're testing already receives a good amount of traffic. Otherwise, the test will take too long to produce definitive results. For websites with low traffic, A/B tests are the more practical choice.

Mistake #2: Ignoring the Sample Size

So you've just set up an A/B test on your website. Two hours later, your testing tool reports results with high statistical confidence: the version with a blue call-to-action button shows a 300% improvement in sign-ups. You jump for joy at this newfound discovery and get ready to commit the change site-wide.

Hold your horses! A testing period that short is a sign that the test was run on an insufficient sample size. For those of us who aren't stats junkies, sample size in this context is the number of website visitors included in the test. An insufficient sample size is one that is statistically too small to accurately represent the entire population (in this context, all of your website's visitors).
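To make "statistically too small" concrete, here's a minimal Python sketch of a two-proportion z-test, a common significance test for comparing conversion rates (this post doesn't say which test any particular tool uses). The numbers are hypothetical: 30 visitors split evenly between two versions, with version B getting 4 sign-ups to version A's 1, a 300% relative improvement like the one in the scenario above.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates.

    Uses the pooled normal approximation, which is only rough for
    counts as small as the ones in this example.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical test: 30 visitors split evenly between two versions.
conv_a, n_a = 1, 15  # version A: 1 sign-up out of 15 visitors
conv_b, n_b = 4, 15  # version B: 4 sign-ups out of 15 visitors

lift = (conv_b / n_b - conv_a / n_a) / (conv_a / n_a)
z, p = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
print(f"relative lift: {lift:.0%}")  # relative lift: 300%
print(f"z = {z:.2f}, p = {p:.2f}")   # z = 1.47, p = 0.14
```

A p-value around 0.14 is well above the conventional 0.05 cutoff, so despite the dramatic lift, this result is entirely consistent with chance. With counts this small, an exact test such as Fisher's exact test would be more appropriate than the normal approximation used here.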
When your site receives 50,000 visitors a month, a test run on only 30 visitors (just 0.06% of monthly visits) won't give you conclusive results; the apparent improvement might simply be due to chance. If you implement a change based on an insufficient sample size, you might soon realize that your conversion rates aren't increasing as you expected. Or worse, in some cases they might even decrease. Why? With an insufficient sample size, the improvement you saw during the testing period may have been due to chance alone, and the version that appeared to win could actually perform worse when tested on an appropriately sized sample. To avoid this mistake, determine the appropriate sample size for your test before you start it. One way to do this is with Visual Website Optimizer's free test duration calculator.
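This post doesn't describe the formula behind VWO's calculator, so the following is not how that tool works. It's a minimal Python sketch of the standard textbook approximation for the sample size needed to compare two conversion rates, turned into a duration estimate. All inputs are hypothetical assumptions: a 5% baseline conversion rate, a 20% relative lift worth detecting (5% to 6%), a 0.05 significance level, 80% power, and the 50,000 monthly visitors mentioned above (about 1,666 a day). The function names are illustrative.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_version(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors each version needs to detect `relative_lift`.

    Textbook normal-approximation formula for a two-sided test comparing
    two conversion rates; it ignores corrections for testing many versions.
    """
    p1 = baseline_rate                        # current conversion rate
    p2 = baseline_rate * (1 + relative_lift)  # improved rate we want to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

def estimate_duration_days(baseline_rate, relative_lift, num_versions, daily_visitors):
    """Days to run the test if daily traffic is split evenly across versions."""
    needed = visitors_per_version(baseline_rate, relative_lift) * num_versions
    return ceil(needed / daily_visitors)

daily = 50_000 // 30  # hypothetical: 50,000 visitors a month, ~1,666 a day
print(visitors_per_version(0.05, 0.20))               # 8155
print(estimate_duration_days(0.05, 0.20, 2, daily))   # 10
print(estimate_duration_days(0.05, 0.20, 18, daily))  # 89
```

Under these assumptions, each version needs roughly 8,000 visitors, far more than 30. The 18-version line also shows why a multivariate test like the one in Mistake #1 takes so much longer on the same traffic.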

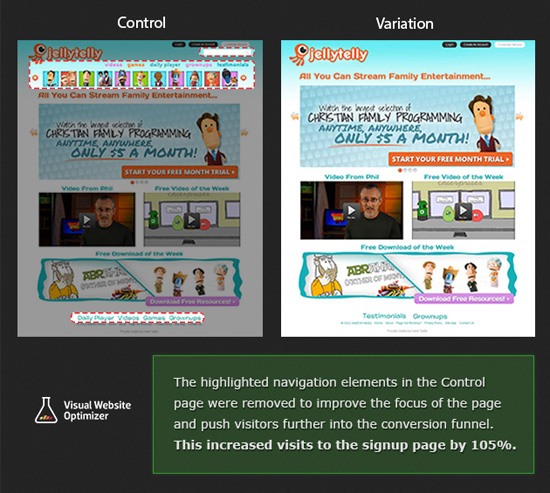

The tool tells you how many days you need to run the test to get reliable results. The calculation is based on the number of versions you're testing, your website traffic, your current conversion rate, and similar inputs.

Mistake #3: Focusing on Only One Website Metric

There are several conversion goals you can use as metrics for success, such as click-through rate, the number of sign-ups for your web app, shopping cart abandonment rate, and so forth. These goals and metrics often interact, so using A/B test data to improve only one metric could negatively impact other important ones. For instance, a design version that improves the click-through rate of your call-to-action button might in turn affect the number of sign-ups you get. Sometimes a design change that decreases one metric is acceptable if it significantly improves a more important one; for example, a lower click-through rate is fine if you're getting significantly more sign-ups. You should understand how your changes affect every relevant metric so that you can make an informed design decision based on your project's priorities.

Mistake #4: Not Segmenting Your Tests

Over at Visual Website Optimizer, one of our customers ran a test to see whether removing some navigation elements from their homepage would increase conversions.

The customer segmented the test so that only new visitors to the site were tested. This made perfect sense: the site was a video streaming service, much like Netflix, so many of its returning visitors were already customers. Since the site owner was most interested in learning how to convert new visitors into customers, segmenting the A/B test was a great idea. Running the test on all visitors would have been a mistake, because the data sample would have included visitors who had already gone through the conversion funnel and were already customers, skewing the results. You can read the complete case study here.

Segment your A/B test whenever doing so gives you more relevant results for the particular test you're running.

Mistake #5: Choosing Aesthetics Over Results

This is one of the biggest initial struggles I see designers face when they start A/B testing their work. Here is a hypothetical scenario (exaggerated for discussion purposes): A designer believes the stock photo she picked looks beautiful in her design. To verify that it's a good design choice, she runs an A/B test with two versions: one with the stock photo (version A) and one without (version B).

The designer discovers that the "Buy Now" button gets more clicks on version B, the version without the stock photo. However, in her opinion, version B looks "ugly." What does she do now? Ignore the results and keep the flower stock photo anyway, choose another stock photo, or make an informed design decision and revise the design so that it looks better without a stock photo?

Once you get into the groove of testing, you will get the hang of balancing design aesthetics against test data. Sometimes the site may not look as pretty as you want it to, but as long as it doesn't look absolutely horrendous, choose the design that performs better. After all, websites are tools. The purpose of a website is not to look pretty, but to achieve certain objectives, like increasing online sales or getting more pageviews. Regardless of how a design looks, the one that is better at achieving the website's goals is the one that should be implemented.

The post 5 Mistakes You Should Avoid When A/B Testing Your Designs appeared first on Six Revisions.